About UWORCS 2021

UWORCS stands for University of Western Ontario Research in Computer Science. UWORCS is the annual internal departmental student conference intended to give students the opportunity to practice presenting to academic audiences.

Your participation is needed to make this event a success. Please Email Amir Memari at amemari@uwo.ca for more details.

REGISTER NOW

Subjects presenters are participating in

Attendants are already registed upto this point of time

Presenters are already registed upto this point of time

KEYNOTE SPEAKER

Aleksander Essex

Aleksander Essex is an associate professor of software engineering and head of the Western Information Security and Privacy Research Lab. His work focuses on vulnerability and threat analysis of real-world voting technology and the new methods supporting evidence-based outcomes for electronic elections. He is frequently invited to share his findings with governments at home and abroad. As part of these efforts, he is currently helping establish Canada's first standards committee for online voting.

Talk title

Cryptography in the Ballot Box: A Closer Look at Microsoft’s ElectionGuard

Despite using paper ballots federally, Canada is quickly becoming a world leader in online voting adoption at the municipal level. However, our study of the 2018 Ontario municipal election shows troubling gaps between the legal principles and the cyber practice. With Ontario’s online voting seemingly headed toward an eventual democratic crisis, one of its most promising pathways to legitimacy involves the special use of cryptography. This talk will examine the technology behind Microsoft’s ElectionGuard, and how it could be used to provide Ontario cities with evidence supporting their online election outcomes.

Dr. Essex's keynote speech will take place on Tuesday, May 18th at 1:40AM. Please join us to welcome Dr. Essex to the 29th Annual Conference - UWORCS 2021.

-

01 Who can attend?

All computer science faculty, graduate students, and 4th-year undergraduate students are encouraged to attend.

-

02 Is there a

registration fee?

No registration fee.

-

03What sort of

research can be presented?

The more you care about a subject the better your talk will probably be. Choose something that you've personally worked on during your grad/undergrad thesis studies, or even a course project with a research flavour. You can even present ongoing research. UWORCS is a great opportunity to practice explaining whatever work you are most proud of.

-

04 How should I prepare my talk?

Presentations will be online via zoom. If you choose to give a talk, you can register for a 20 minute talk. Each talk is preceded by a 3 minute setup and followed by an additional 7 minute question/judging period.

-

05 Does this fulfill my yearly PhD seminar?

Yes. PhD students in their 3rd and 4th years can present their current research and have it count towards their yearly seminar requirement (692).

-

06 Will there be session prizes?

Yes, each oral/poster session has a 1st and 2nd place award including cash prizes. Short talks and long talks are judged together.

-

07 How does session judging/chairing work?

Session judges rank all talks/posters in a session, based mainly on presentation quality and clarity, and thus determine who wins session prizes. Judges also write down feedback that will be anonymized and forwarded to the presenter after the event is over. Session chairs announce each speaker and ensure that talks stay on schedule.

Talks subjects

UWORCS 2020 involves talks that are judged by faculty members and senior students and prizes are awarded to top presenters in a variety of categories including:

Bioinformatics

Computer Algebra

Computer Vision and Image Processing

Data Mining, Machine Learning and AI

Distributed Systems and Applications

Game Design, Graphics, HCI and Visualization Sciences

Software Engineering and Programming Languages

Theory of Computer Science

UWORCS 2020 Team

Amir Memari

Conference Chair

Kareem Jaradat

Web Master

Mohsen Shirpour

Volunteer

Alex Brandt

Volunteer

Andrew Bloch-Hansen

Volunteer

Duff Jones

Volunteer

Linxiao Wang

VolunteerPRESENTERS

-

Integer hull of parametric polytope

Linxiao WangComputing the integer hull of parametric polytope can be used in applications such as analysis, optimization, and parallelization of loop nests. To solve this problem, we first formally define the idea of the periodic polytope and present the proofs of its features. Then we propose a simple algorithm for the integer hull problem using the idea of the periodic polytope.

-

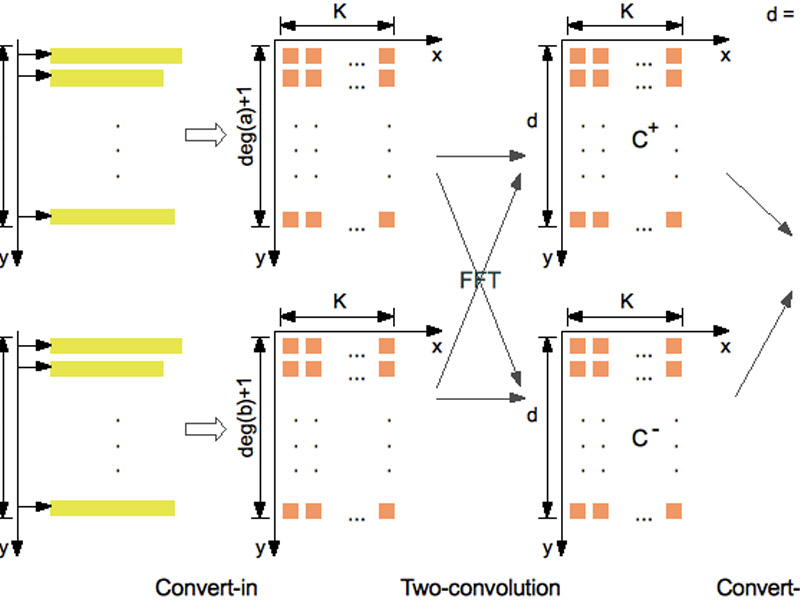

Modular and Speculative Schemes for Computing Subresultants

Mohammadali (Ali) AsadiSubresultants are one of the most fundamental tools in computer algebra. They are at the core of numerous algorithms including, but not limited to, polynomial GCD computations to polynomial system solving, and symbolic integration. When the subresultant chain of two polynomials is involved in a client procedure, not all polynomials of the chain, or not all coefficients of a given subresultant, may be needed. In this talk we discuss modular and speculative schemes that address this problem and show how they can be implemented efficiently in C language as part of the BPAS library with both minimizing arithmetic operations and memory accesses.

-

Multi Scale Identity-Preserving Image-to-Image Translation Network for Low-Resolution Face Recognition

Vahid Reza KhazaieState-of-the-art deep neural network models have reached near perfect face recognition accuracy rates on controlled high-resolution face images. However, their performance is drastically degraded when they are tested with very low-resolution face images. This is particularly critical in surveillance systems, where a low-resolution probe image is to be matched with high-resolution gallery images. super-resolution techniques aim at producing high-resolution face images from low-resolution counterparts. While they are capable of reconstructing images that are visually appealing, the identity-related information is not preserved. Here, we propose an identity-preserving end-to-end image-to-image translation deep neural network which is capable of super-resolving very low-resolution faces to their high-resolution counterparts while preserving identity-related information. We achieved this by training a very deep convolutional encoder-decoder network with a symmetric contracting path between corresponding layers. This network was trained with a combination of a reconstruction and an identity-preserving loss, on multi-scale low-resolution conditions. Extensive quantitative evaluations of our proposed model demonstrated that it outperforms competing super-resolution and low-resolution face recognition methods on natural and artificial low-resolution face data sets and even unseen identities.

-

OLED: One-Class Learned Encoder-Decoder Network with Adversarial Context Masking for Novelty Detection

John JewellNovelty detection is the task of recognizing samples that do not belong to the distribution of the target class. During training, the novelty class is absent, preventing the use of traditional classification approaches. Deep autoencoders have been widely used as a base of many unsupervised novelty detection methods. In particular, context autoencoders have been successful in the novelty detection task because of the more effective representations they learn by reconstructing original images from randomly masked images. However, a significant drawback of context autoencoders is that random masking fails to consistently cover important structures of the input image, leading to suboptimal representations - especially for the novelty detection task. In this paper, to optimize input masking, we have designed a framework consisting of two competing networks, a Mask Module and a Reconstructor. The Mask Module is a convolutional autoencoder that learns to generate optimal masks that cover the most important parts of images. Alternatively, the Reconstructor is a convolutional encoder-decoder that aims to reconstruct unperturbed images from masked images. The networks are trained in an adversarial manner in which the Mask Module generates masks that are applied to images given to the Reconstructor. In this way, the Mask Module seeks to maximize the reconstruction error that the Reconstructor is minimizing. When applied to novelty detection, the proposed approach learns semantically richer representations compared to context autoencoders and enhances novelty detection at test time through more optimal masking. Novelty detection experiments on the MNIST and CIFAR-10 image datasets demonstrate the proposed approach's superiority over cutting-edge methods. In a further experiment on the UCSD video dataset for novelty detection, the proposed approach achieves state-of-the-art results.

-

A Lightweight and Explainable Citation Recommendation System

Maxwell YinThe increased pressure of publications makes it more and more difficult for researchers to find appropriate papers to cite quickly and accurately. Context-aware citation recommendation, which can provide users suggestions mainly based on local citation contexts, has been shown to be helpful to alleviate this problem. However, previous works mainly use RNN models and their variance, which tend to be highly complicated with heavy-weight computation. In this paper, we propose a lightweight and explainable model that is quick to train and obtains high performance. Our model is based on a pre-trained sentence embedding model and trained with triplet loss. Quantitative results on the benchmark dataset reveal that our model achieves impressive performance with or without metadata. Qualitative evidence shows that our model pays different levels of attention to adequate parts of citation contexts and metadata, suggesting that our method is explainable and more trustable.

-

An “Echo State” Network Derived from the Drosophila Melanogaster Olfactory System

Jacob MorraThe main research question for this objective-based work is two-fold: (1) “Can we create a ‘from-the-ground-up’, biologically-derived neural network from the fruit fly olfactory system, and if so, does its architecture (sharpened over generations of evolutionary adaptation) house any useful or interesting properties (i.e. from a machine-learning perspective)?”; (2) “Is there a relationship between this network’s architecture and the functional capabilities (or observable behaviours) of the fruit fly (i.e. from a systems biology perspective)?” Herein we derive an “Echo State” Network (ESN) using the “hemibrain”, an Electron Microscopy (EM) volume of the fruit fly brain; specifically, we extract neuron data (IDs, connection data) from primary olfactory ROIs (Antenna Lobe [AL], Mushroom Body Calyx [CA], Lateral Horn [LH]) into a square weight vector. In order to obtain reasonable training times (during this phase of the work) we reduce our weight vector to only include those neurons from the right calyx; this truncated weight vector serves as the reservoir for our ESN — we refer to this ESN as the “FFNN (fruit fly neural network) ESN”. We train our FFNN ESN on a 3-class wine spoilage dataset for the purpose of classifying between labeled low, average, and high quality wines from 6-component input samples containing electronic nose resistance values. Using our FFNN ESN on 800 test samples, we observe that it performs better (in terms of accuracy) than a random 50-neuron reservoir, but not as well as a random reservoir of the same size; though, we do find that for low to intermediate sample sizes the FFNN ESN slightly outperforms both of its random counterparts. However, more analysis is required: first, using the full FFNN weight vector; and secondly, with a variety of different (and FFNN-relevant) datasets.

-

An approach to extract optimum feature set to identify abnormal traffic.

Sajal Saha"Cyber-attacks are increasing rapidly, so keeping pace with the increased usage of cyberspace is very crucial. DDoS (Distributed Denial of Services) is one of the most well-known digital threats, endangering the world economy. DDoS halts the host from serving the real traffic by overflowing with consistent undesirable requests. In this research work, we attempted to analyze the impact of Feature Selection (FS) in DDoS attack classification using both Machine Learning (ML) and Deep Learning (DL) models. "

-

Analyzing Citation Linkage for the Scientific Texts using Tree Transformer

Sudipta Singha RoyAdvances in the corresponding research area are reflected in research publications. Scientists also use citations in these research publications to bolster the presented research outcomes and depict the refinements that come with these findings, while often navigating the flow of knowledge to make the contents more understandable to the audience. A citation in the science domain refers to the source from which the information is derived, but it does not define the text span that is being referenced. This work develops a framework that can link the citations from the current research paper with the related cited text spans in the cited documentation. It will eventually relieve the readers of the pressure of reading all of the sentences in the cited documents while obtaining the necessary context information. This task has been formulated as a semantic similarity task where given a citation sentence and the cited documents, it tries to highlight the sentences which come with similar semantic meanings with the citation statement. Linear models like LSTM and its variants are already applied for this task. Scientific literatures come with a lot of phrases to represent chemical names. To capture the phrase level syntax and word level dependencies tree-structured neural networks are required. That’s why this work applies Tree-Transformer to analyze the citation linkage task with some interpretation of the outcome.

-

CLAR: Contrastive Learning of Auditory Representations

Haider Al-TahanRepresentation learning is a crucial component in the wide success of deep learning algorithms by disentangling compact independent high-level factors from low-level sensory data. Recently, contrastive self-supervised learning has been successful in learning rich visual representations by leveraging the inherent structure of unlabeled images. However, it is still unclear whether we could use a similar self-supervised approach to learn superior auditory representations. In this work, we expand on prior studies on contrastive self-supervised learning (SimCLR) to learn rich auditory representations. We evaluated the performance of our proposed framework on three different audio datasets from diverse domains (speech, music, and environmental sounds). Furthermore, we trained two family of deep residual neural networks, one type of networks were trained on raw audio signals while the other type utilized time-frequency audio features. In this work, we (1) introduced and evaluated the impact of various auditory data augmentations on predictive performance, (2) showed that training with time-frequency audio features substantially improves the quality of the learned representations compared to raw signals, and (3) demonstrated that simultaneous training with both supervised and contrastive losses improves the learned representations compared to self-supervised and supervised training. We illustrated that by combining all these methods and with substantially less labeled data, our framework (CLAR) achieves significant improvement on prediction performance compared to self-supervised (11.3% improvement) and supervised (1% improvement) approaches. Moreover, while the self-supervised approach converges to > 80% predictive performance within approximately 500 epochs, our framework converges to > 90% within approximately 30 epochs.

-

Explainable AI in Clinical Decision Support

Mozhgan SalimiparsaClinical decision support (CDS) systems are computer applications whose goal is to facilitate the decision-making process of clinicians. In recent years, CDSS has developed an interest in applying machine learning (ML) models to make predictions related to clinical outcomes. The limited interpretability of many ML models is a major barrier to clinical adoption. This challenge has sparked research interest in interpretable and explainable AI, commonly known as XAI. XAI methods are used to construct and communicate explanations of the predictions made by machine learning models so that end-users can interpret those predictions. However, these methods are not designed based on end-users' needs; rather, they are based on the developers’ intuitions of what a good explanation is. Furthermore, XAI methods are not tailored to the specific tasks that a user will undertake, nor are they tailored to the interface used to perform those tasks. To tackle these issues, we propose to develop a visual analytic tool to explain an ML model for clinical applications whose design will explicitly take into account the context of tasks and the needs of end-users.

-

Forward Transfer in Lifelong Learning

Xinyu YunLifelong learning (LL), a paradigm of learning tasks sequentially, is an important field in machine learning.Most of the LL research focuses on how to avoid catastrophic forgetting of old tasks when a new task is learned, moreover,another primary goal in LL is “forward transfer”, utilizing prior learned knowledge to obtain better performance for latertasks. In this report, papers appearing “forward transfer” from recent top conferences will be reviewed. Approaches of optimizing forward transfer in or potentially applied to a Lifelong Learning framework will be introduced based on two general directions: regularization-based, and dynamic architecture.

-

Hierarchical Reinforcement Learning for Decision Support in Health Care

Caro StricklandOptimal decision-making is critical within many organizations. A large number of these organizations are structured hierarchically but make sequential decisions (like health care, for example). Using data collected over time by these organizations, we have been able to successfully apply reinforcement learning (RL) to many sequential decision-making problems. In doing this, however, we are not taking advantage of the benefits of their hierarchical structures and the ways different layers affect each other, and thus are not able to learn optimal decision-making techniques. Hierarchical reinforcement learning (HRL) is a powerful tool for solving extended problems with spare rewards. HRL decomposes a RL problem into a hierarchy of subtasks to be solved individually using RL. Because of this, the fundamental concept of HRL applies nicely to our problem. Unfortunately, due to limitations in current research, classic HRL frameworks are not quite suitable for our problem. In our work, we plan to formalize a new HRL framework that is capable of building sequential decision-making support models using datasets collected from stochastic behavioral policies.

-

Stacking Classifiers on Different Feature Subsets to Reduce the Problem of Overlapping

Classes in Text Classification

Yasmen WahbaCorrect classification of customer support tickets or complaints can help companies to improve the quality of their services to the customers. One of the challenges in text classification is when certain categories tend to share the same vocabulary. This can result in misclassification by the machine learning algorithm used. The problem is worsened when the dataset is imbalanced. To address this issue, we propose a stacking algorithm based on combining classifiers that operate on different feature subsets, depending on those features that tend to improve the recall and the precision of the overlapped classes. In our approach, first, we train different linear and non-linear classifiers on the full feature set. Second, we use the chi2 test to determine the best feature set for all our pre-trained classifiers that improve the f1-score for the overlapped class(es). Finally, we train a two-layered stacked model composed of the best base learners obtained from the first step as layer-1 and combine it with a strong meta-learner for the second layer. We also propose a generic framework for classifying text documents. The experimental results on a real-world dataset from a large IT organization and a public Consumer Complaint database show an improvement in the overall accuracy as well as a reduction in the misclassification rate for the overlapped classes.

-

An Effective and Reliable Multi-Factor Authenticated Blockchain-Based OTA Update Framework for Connected Autonomous Vehicles

Sadia YeasminAccording to IHS Automotive, by 2022, more than $35 billion costings will be grown worldwide in the OEM industry regarding OTA software and firmware updates for autonomous vehicles, which was $2.7 billion in 2015. Following this tremendous ratio, it is clear that software and firmware updates via OTA have gained the topmost popularity for the automotive industry to achieve significant cost savings while providing better service quality. And the system must stay updated with all the latest software versions incorporated inside the complete in-vehicular design to remain integrated and feasible. However, as this leveraging mechanism of integrating and updating advanced software features enhances the vehicles' quality, both for manufacturers and consumers, it exposes the autonomous vehicles to critical privacy and security breaching threats parallelly. This work has introduced a decentralized and distributed blockchain-based OTA update framework for connected autonomous vehicles, ensuring improved driving performance, a cost-effective updating scheme for manufacturers, and glitch-free updates of the software, firmware. This approach would incentivize the users to feel more reliable and confident by ensuring that they are not bombarded with constant update notifications while driving and have adequate control over the manufacturer’s exchanged data. This analysis would provide an easy-to-implement and transparent algorithm showing how the system works while ensuring reliable and faster network performances, including multi-factor authentication. No vehicle would miss out on any new features right after it has been uploaded in the cloud or even if the cloud server faces any partial service interruption. Moreover, this efficient blockchain framework would suit any network size and ensure the same service quality whether the autonomous vehicle is running or standstill.

-

On Distributed Gravitational N-Body Simulations

Alex BrandtThe N-body problem is a classic problem involving a system of N discrete bodies mutually interacting in a dynamical system. At any moment in time there are N(N-1)/2 such interactions occurring. This scaling as N^2 leads to computational difficulties where simulations range from tens of thousands of bodies to many millions. Approximation algorithms, such as the famous Barnes-Hut algorithm, simplify the number of interactions to scale as NlogN. Even still, this improvement in complexity is insufficient to achieve the desired performance for very large simulations on computing clusters with many nodes and many cores. In this work we explore a variety of algorithmic techniques for distributed and parallel variations on the Barnes-Hut algorithm to improve parallelism and reduce inter-process communication requirements. Our MPI implementation of distributed gravitational N-body simulation is evaluated on a cluster of 10 nodes, each with two 6-core CPUs, to test the effectiveness and scalability of the aforementioned techniques.

-

Serverless SLA, Current state and directions

Mohamed ElsakhawyServerless computing is a recent cloud delivery model that enables developers to focus on code development while relying on the cloud provider to provision and operate the underlying layers of the cloud platform. Using serverless computing, developers submit code to the cloud provider that is executed when a triggering event is satisfied. While the model has a vast potential for constructing complex applications from a large number of asynchronous serverless functions, recent surveys illustrate the dominance of simple trigger-response workflows in the serverless model. A challenge that faces wide-scale adoption of the serverless model in constructing complex applications is the lack of Service Level Agreements ""SLA"" for serverless platforms. None of the major commercial serverless providers offer APIs for assessing the service level performance of their platforms.In this presentation, we highlight the challenges facing the definition of serverless SLA and its current state. We then present our proposed framework for defining, and assessing compliance with, serverless SLA.

-

Smart Home Energy Visualizer

Kareem JaradatWhile technology advancements are continuously improving the energy efficiency of household appliances, energy consumption analysis and providing feedback to consumers on this analysis remains a critical issue in ensuring the effectiveness of such improvements. Visual feedback is a promising technique in promoting energy conservation by applying Demand Response in Smart Home Energy Management Systems (SHEMS). In this paper, we propose Smart Home Energy Visualization system (SHEV), a SHEM subsystem that consists of three components: Appliance Profile Detector with XCorrelation (APDX) that monitors the activation of household appliances, Operation Modes Identification using Cycles Clustering (OMICC) to identify the operation modes used, and the visualizer to represent the appliance usage related information to the user using Concentric Circles Representation (CCR). This visualization assists the consumer in applying Demand Response (DR) by better understanding the appliance usage so that consumer make sense of the consumption, hence better decision making in energy conservation.

-

From Theory to Design: Using Interactive Visualization Tools to Support Complex Learning of Ontological Space

Jonathan DemeloOntology datasets, which encode the expert-defined complex objects mapping the entities, relations, and structures of a domain ontology, are increasingly being integrated into the performance of challenging knowledge-based tasks. Yet, it is hard to use ontology datasets within our tasks without first understanding the ontology which it describes. Using visual representation and interaction design, Interactive Visualization Tools (IVTs) can help us learn and develop our understanding of unfamiliar ontologies. After a review of existing tools which visualize ontology datasets, we find that current design practices struggle to support learning tasks when attempting to build understanding of the ontological spaces within ontology datasets. During encounters with unfamiliar spaces, our cognitive processes align with the theoretical framework of cognitive map formation. Furthermore, designing encounters to promote cognitive map formation can improve our performance during learning tasks. In this talk, we present a brief introduction to IVT design concepts such as visual representation, interaction, and cognitive load. We then discuss the cognitive map formation of spaces and the types of cognitive activities we perform when encountering spaces like ontologies. From this, we summarize the high-level criteria for designing IVTs to support complex learning of ontological space and the generalized component types used in existing IVTs for these tasks. We conclude with a brief overview of PRONTOVISE, our novel multi-modal IVT designed to satisfy the necessary criteria to support complex learning of ontological space.

-

Leveraging Reinforcement Learning and WaveFunctionCollapse for Improved Procedural Level Generation

Mathias BabinWe will be presenting a novel approach to training Reinforcement Learning (RL) agents to serve as heuristics for the WaveFunctionCollapse (WFC) algorithm in the production of procedurally generated video game levels. While the WFC algorithm's original minimal entropy heuristic is sufficient for constructing levels that look visually appealing, it is often the case that these levels suffer when playability is concerned. The approach presented in this work involves replacing this heuristic with a set of deep neural networks trained using RL to direct the algorithm in the construction of playable levels for the original Super Mario Bros. (SMB). We evaluate the performance of our models using a game-playing A* agent provided by the Mario AI Competition Framework and designate a simple reward function which reflects the quality of generated levels based on this agent’s ability to successfully navigate them. Results using this approach show an increase in the percentage of playable levels generated using our learned heuristics over those using minimal entropy.

-

Developing a New Approach to Synchronization Free Parallel Stochastic Gradient Descent

Christopher StojanovskiCurrent versions of parallelized stochastic gradient descent utilizing lock free approaches are plagued by race conditions and false sharing. This results in wasted operations as well as poor speed in denser datasets. Hence, there is a need to develop this algorithm further, in a way that will improve processing speeds. Here, we discuss a proposed approach that involves splitting training data equally amongst processors in order to reduce race conditions and false sharing. This will be done by first creating subsets of sparse datasets that minimize same parameter usage between subsets, reducing race conditions. To reduce false sharing, parameters in shared memory will be sorted to separate commonly used parameters from being adjacent to each other. With these alterations, our hope is to develop a faster, more robust version of parallelized stochastic gradient descent.

-

High Multiplicity Strip Packing

Andrew Bloch-HansenWe consider the high multiplicity strip packing problem: given k distinct rectangle types, where each rectangle type has nk rectangles with width 0 < wk <=1 and height 0 < hk <=1 , we must pack these rectangles into a strip of width 1, without rotating or overlapping the rectangles, such that the total height of the packing is minimized. We review our previous algorithm for when k=3, and we present an improved algorithm for the same case.

Talks Schedule

The following is the talks schedule for the 3 sesstions

Results

The list of UWORCS2021 winners have awarded with certeficates and cash prizes